GenAI-Powered Digital Threads Part 2 - A Novel Approach to AI Security

Updated March 24, 2024

In our previous blog post, we spoke about borrowing the concept of Digital Threads from the manufacturing world in order to aggregate disparate company data into a single source: a knowledge graph. This knowledge graph can provide us with important security context through transparency and visibility across our organization's many different data sources, driving better AI-powered security, risk management, and mitigation.

When we understand the source of risk, such as which repositories are actually running in production, or whether machines are exposed to the public web, we are better equipped to prioritize security risk and remediation for our organization. With alert fatigue growing across all engineering disciplines from DevOps to QA to Security, we need to work towards minimizing the noise and focusing on what really brings our organization value. This is the exact benefit that a human-language queryable graph database can deliver to our already bogged-down engineering teams.

In this post, we’ll dive into the technical specifics under the hood of the graph database, including:

- Graph database architecture
- Tools that helped launch the application
- Notebooks used to build the knowledge graph
- GenAI model that enabled the querying through human language that also outputs results in human language

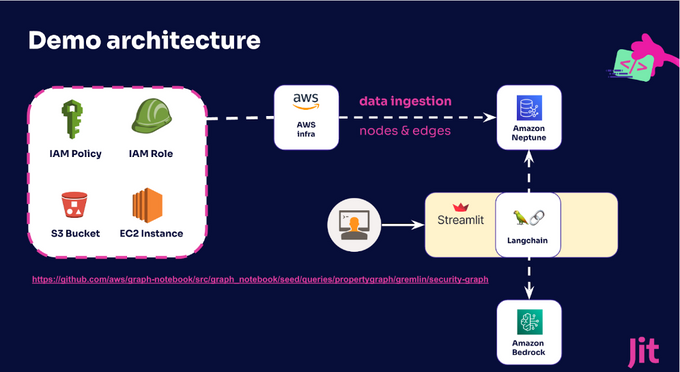

This technical example was built on the AWS AI service suite to test its capabilities, and it was pretty impressive, with a minimal learning curve for the AI enthusiast. The example uses Neptune as the graph database and Claude 3 on Bedrock as the GenAI model (LLM), along with out-of-the-box security notebooks to populate the data. Coupled with excellent docs and some tinkering, this made it possible to wire the example into common open-source tools like LangChain and query languages like openCypher, and to test GenAI-powered, context-based security in action.

The Graph Database Architecture

In this example, we start with a GitHub repository with two folders. In one folder, you'll find a Terraform file that brings up an end-to-end Neptune environment, which is particularly useful for those just getting started (I had to learn to configure this from scratch). This Terraform will spin up a cluster and an EC2 proxy to connect locally. Neptune comes packaged with several notebooks that also include data sources. One such notebook is called “security graph”, and its dataset was used for this example.

The notebook is the basis from which the data is ingested into the graph, and it lets you query all of the data available in the graph. A nifty feature is that, inside the notebook, you can also visualize the data as a graph to understand the relationships between the data sources.

One additional step was added in this sample app and demo: an EC2 instance was placed in the same subnet as Neptune so that we can communicate with Neptune remotely. By default, Neptune sits inside the VPC and is not otherwise accessible from outside. Once we have these tools and our cluster set up, it's time to connect all of this to GenAI and see what happens.

Querying our Knowledge Graph

Open an SSH tunnel to connect to your Neptune cluster locally. Take the cluster address from the Terraform output, add it to your /etc/hosts file so that it points to 127.0.0.1, and then open the tunnel with the following command:

ssh -i neptune.pem -L 8182:<db-neptune-instance-id>.us-east-1.neptune.amazonaws.com:8182 <EC2 Proxy IP>
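Before starting the application, it can help to confirm that the tunnel is actually listening. The following is a minimal sketch, assuming the tunnel forwards local port 8182 as in the command above; the function name `tunnel_is_open` is hypothetical and not part of the sample app.

```python
import socket


def tunnel_is_open(host: str = "127.0.0.1", port: int = 8182, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port.

    This only checks that the local end of the SSH tunnel is listening;
    it does not prove that Neptune itself is healthy behind it.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

Calling `tunnel_is_open()` with the defaults checks the forwarded port on localhost. A `False` result usually means the SSH session is not running or the port is different.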

Now it is possible to run the application. The application starts with a default question “Which machines do I have running in this data set?”

Behind the scenes, it runs the following Cypher query: ‘MATCH (m:`ec2:instance`) RETURN m’. But how does it know to run this specific query? The database schema is included in the prompt alongside the question, and the model converts the two into a Cypher query. Not only will you see the data output as JSON in the CLI, but if you return to the application, you will also see the generated response in natural human language.
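To make the schema-plus-question idea concrete, here is a minimal sketch of how such a prompt could be assembled. This is an illustration under assumptions, not the prompt the library actually sends: the template wording and the name `build_cypher_prompt` are hypothetical, and the only schema element taken from the example is the `ec2:instance` label.

```python
# Hypothetical template; the real library ships its own prompt wording.
PROMPT_TEMPLATE = """You translate questions into openCypher queries.
Use only the node labels, relationship types, and properties in the schema.

Schema:
{schema}

Question: {question}

Return only the openCypher query."""


def build_cypher_prompt(schema: str, question: str) -> str:
    """Combine the graph schema and the user's question into one prompt."""
    return PROMPT_TEMPLATE.format(schema=schema.strip(), question=question.strip())


# Example inputs: the label comes from the post, the schema format is assumed.
example_prompt = build_cypher_prompt(
    schema="Node labels: `ec2:instance`",
    question="Which machines do I have running in this data set?",
)
```

Because the whole schema travels with every question, the prompt grows with the graph, which is worth keeping in mind when choosing a model.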

And this is just one example. It is possible to query the application and receive a relevant response about any data point available in the knowledge graph. So if we take a security example, and ask how we can achieve better AI-based security for our systems, we can find out whether any of these machines have public IPs, or get a list of all the types of cloud resources we have in our stack (an inventory). The application can aggregate this inventory based on the node labels, which are essentially a list of all the different object types we have in our graph.
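The inventory question can be sketched as a label-count query plus a small aggregation step. This is a sketch under assumptions: the query is one plausible openCypher formulation rather than the one the model generated in the demo, and the row shape (a list of dicts with `labels` and `total` keys) is assumed for illustration.

```python
from collections import Counter
from typing import Dict, List

# One possible openCypher query for counting nodes per label set.
INVENTORY_QUERY = "MATCH (n) RETURN labels(n) AS labels, count(n) AS total"


def summarize_inventory(rows: List[dict]) -> Dict[str, int]:
    """Sum node counts per label from rows shaped like the query's output."""
    inventory: Counter = Counter()
    for row in rows:
        # A node can carry several labels; count it under each of them.
        for label in row["labels"]:
            inventory[label] += row["total"]
    return dict(inventory)
```

Each key in the returned dictionary is a node label (an object type in the graph), and each value is how many nodes carry it.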

A Word on Limitations & Good Practices for Optimal Results

When I first started playing around with this stack and testing different AI models, it worked well until one of the prompts was too large (the prompt includes the graph database schema, and it exceeded the model's context window), at which point the library threw an error. With some other questions I threw at the engine, the resulting openCypher query was incorrect (a syntax error). This raises the question: which model should we use?
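Both failure modes can be guarded against in application code. The sketch below is one possible approach, not what the library does: it uses a rough characters-per-token heuristic instead of a real tokenizer, and `generate` and `validate` are hypothetical callables standing in for the model call and a query check.

```python
from typing import Callable, Optional


def estimate_tokens(text: str) -> int:
    """Very rough estimate (about 4 characters per token); not a tokenizer."""
    return max(1, len(text) // 4)


def fits_context(prompt: str, context_window: int, reserved_for_answer: int = 1024) -> bool:
    """Check whether the prompt, plus room for the answer, fits the window."""
    return estimate_tokens(prompt) + reserved_for_answer <= context_window


def generate_valid_query(
    question: str,
    generate: Callable[[str, str], str],
    validate: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> str:
    """Ask for a query, feeding any validation error back into the next try.

    generate(question, feedback) returns a query string.
    validate(query) returns None when the query is fine, else an error message.
    """
    feedback = ""
    for _ in range(max_attempts):
        query = generate(question, feedback)
        error = validate(query)
        if error is None:
            return query
        feedback = f"The previous query failed with: {error}"
    raise RuntimeError(f"No valid query after {max_attempts} attempts")
```

Checking `fits_context` before calling the model turns a library error into a decision you control, such as trimming the schema or switching to a model with a larger window.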

There are several models, but not all work in the same way. In this example, we leveraged Bedrock and compared several models. Claude 3 delivered the best results for this example, as did GPT-4 from OpenAI. So if you want to get started and play around, these are the two recommended models. Benchmarking and fine-tuning the AI models is a must as some will perform better than others based on your use case.
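A simple way to compare models is to run the same questions through each one and score how often the generated query passes a check. The harness below is a minimal sketch under assumptions: each model is represented by a hypothetical callable that takes a question and returns a query, and `is_valid` is whatever check suits your use case (for example, whether the query executes without error).

```python
from typing import Callable, Dict, List


def benchmark_models(
    models: Dict[str, Callable[[str], str]],
    questions: List[str],
    is_valid: Callable[[str], bool],
) -> Dict[str, float]:
    """Return, per model, the fraction of questions that yield a valid query."""
    if not questions:
        raise ValueError("questions must not be empty")
    scores: Dict[str, float] = {}
    for name, generate in models.items():
        passed = sum(1 for question in questions if is_valid(generate(question)))
        scores[name] = passed / len(questions)
    return scores
```

A pass rate like this only measures whether queries are valid, not whether the answers are right, so it is best paired with a handful of questions whose expected results you already know.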

The library itself exposes a variety of parameters to experiment with, including the prompts sent to the engine, and a certain amount of tuning here will noticeably affect the quality of the results.

In addition, the more you enrich the graph with data, the richer the queries you'll be able to run, and the more value you'll receive from each query. By connecting the graph to third-party resources, you can receive additional business context. For example, you could query an HR system about active employees and see who still has access to different cloud resources.
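The HR example boils down to comparing two data sets: who is currently employed, and who holds access. In the graph this would be answered by a query across both sources. The sketch below shows the same logic in plain Python, with hypothetical data shapes (a list of active usernames and a list of user and resource pairs).

```python
from typing import Dict, Iterable, List, Tuple


def find_stale_access(
    active_employees: Iterable[str],
    access_grants: Iterable[Tuple[str, str]],
) -> Dict[str, List[str]]:
    """Map each non-active user to the cloud resources they can still reach."""
    active = set(active_employees)
    stale: Dict[str, List[str]] = {}
    for user, resource in access_grants:
        if user not in active:
            stale.setdefault(user, []).append(resource)
    return stale
```

Any user that appears in the result has access without being an active employee, which is exactly the kind of finding that is hard to spot when the two systems live apart.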

AWS Neptune + Bedrock for Better AI Security

In this example, we demonstrated how a simple AWS-based stack of Neptune and Bedrock, together with common OSS libraries like LangChain and Streamlit, made it possible to build a knowledge graph that delivers the same kind of value as a digital thread in manufacturing. By consolidating disparate organizational data into a single graph database, we can use GenAI to query this data and receive accurate results grounded in this organizational and system context. All of this happens in human language, which removes the learning curve of acquiring a new syntax or analyzing complex raw JSON results.

The more we converge data, and are able to visualize the relationship between the different data sources, the more we can have a rapid understanding of issues when they arise - from breaches to outages. This has immense benefits for engineering, security, and operations alike, enabling us to have a richer context when it comes to the root cause with greater data and context-driven visibility into our systems.